Beyond Code Generation: Using Large Language Models Inside Your Functions

The real unlock isn’t just generating code—it’s replacing entire functions with AI-powered intelligence

Lately, I've been spending a lot of time experimenting with AI coding tools like Lovable and Cursor. They're definitely changing how I approach development (a topic for another day!), but one specific 'unlock' I've experienced recently really caught my attention: the shift from using LLMs only to generate code to replacing complex code inside my functions with direct LLM calls.

When I first started integrating LLMs into my coding workflow, the obvious benefit was speeding up the first draft. You write a prompt, the model generates code. It's rarely perfect right out of the box, maybe similar to collaborating with a junior developer – you still need to review, refine, simplify, and correct it. It’s a significant timesaver compared to starting from a blank page, but I noticed it usually doesn't suggest fundamentally new ways of approaching a problem. Often, it defaults to older patterns based on its training data.

Costs of LLMs have plummeted

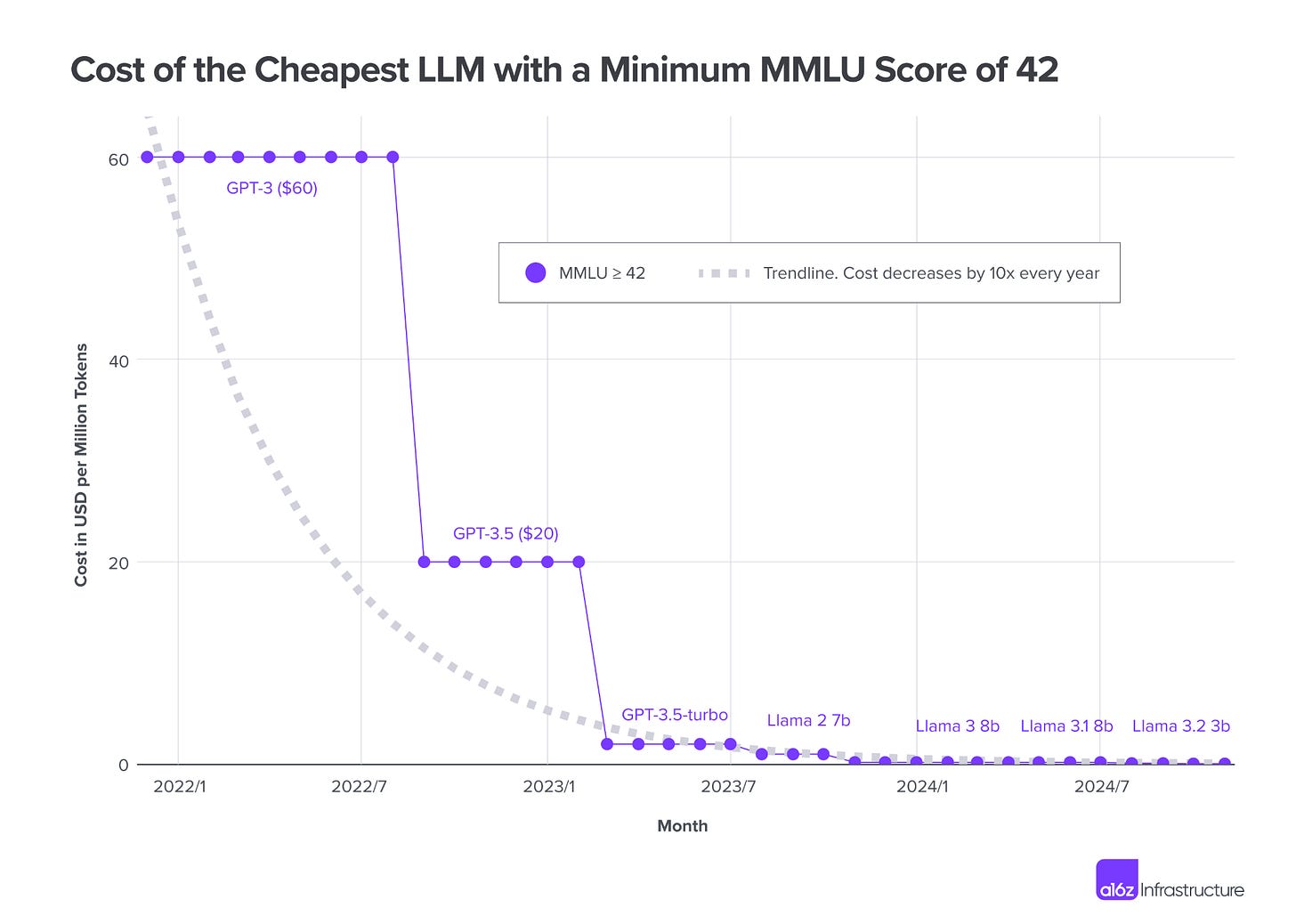

But here’s what’s enabling a truly different, more integrated approach: the absolutely staggering drop in LLM inference costs. Looking at the trends, the cost for a consistent level of model intelligence seems to have plummeted by something like 1000x in just three years – maybe from around $60 per million tokens in 2022 down to $0.06 or less today (as of early 2025). This isn't just an incremental improvement; it's a paradigm shift. Applications and techniques that were completely impractical from a cost perspective just a couple of years ago are suddenly unlocked and economically viable.
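To make the scale concrete, here is a rough back-of-the-envelope calculation. The per-million-token prices are the ones quoted above; the 200 tokens per call and the one million calls are assumptions picked purely for illustration, not measurements.

```python
# Back-of-the-envelope cost comparison.
# ASSUMPTIONS (illustrative only): 200 tokens per call, 1,000,000 calls.
TOKENS_PER_CALL = 200
CALLS = 1_000_000


def total_cost_usd(price_per_million_tokens: float) -> float:
    """Total spend for CALLS calls of TOKENS_PER_CALL tokens each."""
    total_tokens = TOKENS_PER_CALL * CALLS
    return price_per_million_tokens * total_tokens / 1_000_000


cost_2022 = total_cost_usd(60.0)  # roughly $60 per million tokens
cost_2025 = total_cost_usd(0.06)  # roughly $0.06 per million tokens

print(f"At $60/M tokens:   ${cost_2022:,.2f}")
print(f"At $0.06/M tokens: ${cost_2025:,.2f}")
```

Under those assumptions, a million small calls go from roughly $12,000 to roughly $12, which is the difference between "needs a budget line" and "rounding error".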

This cost reduction opened up a new pattern for me: using LLMs as the computational engine inside certain functions, replacing what previously required intricate logic, complex parsing, or brittle rule sets. Instead of writing, testing, and maintaining potentially complex code for specific tasks, you can essentially 'delegate' the core transformation or interpretation task to an LLM within the function itself.

And tools are emerging to make this pattern more robust and easier to implement. I've been particularly impressed with open-source projects like Instructor (https://github.com/instructor-ai/instructor). It provides a clean way to integrate calls to various LLMs (like models from OpenAI, Anthropic, etc.), strongly type the expected output structure using Pydantic, and automatically handle validation and even retries if the output isn't quite right.

Take a common example: parsing natural language dates like "next Tuesday at 3pm" or "end of next month." In the past, building this reliably meant wrestling with complex regular expressions, creating long chains of if/else statements, or integrating and managing a dedicated NLP library. These solutions could be rigid, hard to extend, and often a headache to debug.

Let's make this concrete. Imagine trying to build this parser manually.

The "Old Way" (Simplified Example):

You might start writing something like this, quickly realizing its limitations:

```python
import datetime


def parse_date_old(text: str) -> datetime.date | None:
    """
    A simple, brittle date parser. Handles only a few specific cases.
    Fails on many common inputs like "next Tuesday" or complex formats.
    """
    text = text.lower().strip()
    today = datetime.date.today()

    if text == "today":
        return today
    elif text == "tomorrow":
        return today + datetime.timedelta(days=1)
    elif text == "yesterday":
        return today - datetime.timedelta(days=1)
    else:
        # Try a specific format like MM/DD/YYYY (very limited)
        try:
            # NOTE: This simple split is naive; real parsing needs more care
            month, day, year = map(int, text.split('/'))
            # Basic validation (year reasonable?) - more checks needed
            if 1900 < year < 2100:
                return datetime.date(year, month, day)
            else:
                return None  # Invalid year (this also rejects "1/1/24")
        except (ValueError, TypeError):
            # We land here for "Jan 1st", "next week", etc.
            # Handling these requires much more complex regex, date math,
            # or external libraries like dateutil.parser.
            return None


# Example usage:
print(f"Old way 'tomorrow': {parse_date_old('tomorrow')}")
print(f"Old way '03/27/2025': {parse_date_old('03/27/2025')}")
print(f"Old way 'next Tuesday': {parse_date_old('next Tuesday')}")  # -> Returns None
```

Building this parse_date_old function robustly to handle even a reasonable fraction of common natural language date expressions becomes a significant, ongoing engineering effort. You end up with complex, hard-to-maintain code or rely on external libraries that might still have their own quirks and limitations.
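To give a sense of that effort, here is a minimal sketch of hand-coding just one more phrase family, "next <weekday>". The helper name `parse_next_weekday` is hypothetical, and the interpretation (the first matching weekday strictly after the reference date) is an assumption; people genuinely disagree about what "next Tuesday" means, which is part of the problem.

```python
import datetime
from typing import Optional

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]


def parse_next_weekday(text: str, today: datetime.date) -> Optional[datetime.date]:
    """Handle only 'next <weekday>'; return None for anything else."""
    words = text.lower().split()
    if len(words) != 2 or words[0] != "next" or words[1] not in WEEKDAYS:
        return None
    target = WEEKDAYS.index(words[1])  # Monday == 0, matching date.weekday()
    # Days until the target weekday, always moving at least one day forward.
    days_ahead = (target - today.weekday() - 1) % 7 + 1
    return today + datetime.timedelta(days=days_ahead)


reference = datetime.date(2025, 3, 26)  # a Wednesday
print(parse_next_weekday("next Tuesday", reference))
print(parse_next_weekday("end of next month", reference))
```

That is a dozen lines for a single pattern, and it still says nothing about "Tuesday next week", "a week from Friday", or "end of next month".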

The "New Way" (Using Instructor and an LLM):

Now, contrast that with using an LLM structured output tool like Instructor:

```python
import datetime
from typing import Annotated

import instructor
from openai import OpenAI
from pydantic import AfterValidator, BaseModel, Field


# Define the desired output structure using Pydantic,
# adding a validator for extra safety.
def validate_reasonable_date(v: datetime.date) -> datetime.date:
    # AfterValidator runs once Pydantic has already parsed the model's
    # "YYYY-MM-DD" string into a datetime.date. (A BeforeValidator would
    # see the raw string, so an isinstance check against date would always fail.)
    if 1970 < v.year < 2100:
        return v
    raise ValueError("Date is outside the expected range")


ReasonableDate = Annotated[datetime.date, AfterValidator(validate_reasonable_date)]


class DateResponse(BaseModel):
    extracted_date: ReasonableDate = Field(
        ..., description="The precise YYYY-MM-DD date extracted from the text."
    )


try:
    # OpenAI() reads the API key from the OPENAI_API_KEY environment variable.
    # Newer Instructor releases also offer instructor.from_openai(OpenAI()).
    client = instructor.patch(OpenAI())
except Exception as e:
    print(f"Failed to initialize OpenAI client. Ensure API key is set. Error: {e}")
    client = None  # Avoid errors later if client fails


def parse_date_new(text: str) -> DateResponse | None:
    """
    Parses natural language date string using an LLM via Instructor.
    Delegates core parsing logic; requires validation (handled by Pydantic/Instructor).
    """
    if not client:
        print("OpenAI client not initialized.")
        return None

    try:
        # Use instructor's patched client method with response_model
        response = client.chat.completions.create(
            model="gpt-3.5-turbo-0125",  # Or other capable models like GPT-4o mini, Claude Haiku, etc.
            response_model=DateResponse,  # Tell Instructor the expected structure
            messages=[
                {"role": "system", "content": "You are an expert date parsing assistant. Analyze the user's text and determine the single, most likely date they are referring to. Output in YYYY-MM-DD format."},
                {"role": "user", "content": f"Please parse the date from this text based on today's date being {datetime.date.today().strftime('%A, %B %d, %Y')}: '{text}'"},
            ],
            max_retries=2,  # Instructor can automatically retry on validation errors
        )
        # If successful, Instructor returns a validated DateResponse object
        return response
    except Exception as e:
        # Handle potential LLM errors, validation errors from Instructor, or API issues
        print(f"Error parsing date '{text}' with LLM: {e}")
        return None


# Example usage (assuming client initialized successfully):
if client:
    # Relative phrases are resolved against the date on which this code runs
    print(f"New way 'tomorrow': {parse_date_new('tomorrow')}")
    print(f"New way '03/27/2025': {parse_date_new('03/27/2025')}")
    print(f"New way 'next Tuesday': {parse_date_new('next Tuesday')}")
    print(f"New way 'End of November 2025': {parse_date_new('End of November 2025')}")
```

In this parse_date_new function, we define the precise structure we expect using Pydantic (DateResponse). We patch our OpenAI client using instructor.patch. Then, within the function, we simply call the LLM, passing the text and specifying response_model=DateResponse. Instructor handles the communication with the LLM, retrieves the response, and critically, validates that the response conforms to our DateResponse model (including the ReasonableDate check). The complex task of understanding "next Tuesday" relative to today, or "End of November 2025," is handled by the LLM's capabilities, not lines and lines of brittle application code.
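One detail worth calling out: the model only knows what "today" is because the prompt tells it. A small sketch of factoring the prompt construction into its own helper with an injectable reference date is below. The names `build_messages` and `SYSTEM_PROMPT` are hypothetical, not part of Instructor or the OpenAI SDK; the prompt text is the same as in the example above. Pinning the date this way makes the relative-date behavior reproducible in tests.

```python
import datetime
from typing import Dict, List, Optional

SYSTEM_PROMPT = (
    "You are an expert date parsing assistant. Analyze the user's text and "
    "determine the single, most likely date they are referring to. "
    "Output in YYYY-MM-DD format."
)


def build_messages(text: str, today: Optional[datetime.date] = None) -> List[Dict[str, str]]:
    """Build the chat messages, anchoring relative phrases to `today`."""
    reference = today or datetime.date.today()
    anchor = reference.strftime("%A, %B %d, %Y")
    user_content = (
        f"Please parse the date from this text based on today's date being {anchor}: '{text}'"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


# Pin the reference date so the prompt is identical on every run.
messages = build_messages("next Tuesday", today=datetime.date(2025, 3, 26))
print(messages[1]["content"])
```

The resulting list can be passed as the `messages` argument of the call shown earlier, leaving the rest of the function unchanged.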

Benefits of the New Approach

The beauty of this LLM-based approach, facilitated by tools like Instructor, includes:

Simpler Application Code: Your code focuses on what you need (a validated date), not the complex how of parsing every possible natural language variation.

Reduced Development & Maintenance: Less time spent writing, debugging, and updating intricate parsing logic.

Increased Flexibility: LLMs often handle a wider range of inputs and nuances more gracefully than hand-coded rules.

Minimal Runtime Cost: As mentioned, the cost per LLM call for tasks like this is now incredibly low, often making it economically advantageous when factoring in saved developer time.

Making it Robust

Tools like Instructor are key here because they add a layer of reliability. By allowing you to define the expected output structure (using Pydantic) and automatically handling validation (and even retries on failure), they bridge the gap between the probabilistic nature of LLMs and the need for predictable outputs in software.
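For intuition, the validate-then-retry idea can be sketched in a few lines of standard-library Python. This is not Instructor's actual implementation: `validate_date_payload`, `call_with_retries`, and `fake_model` are hypothetical names, the JSON shape is assumed to mirror `DateResponse`, and plain `json` plus `datetime` stand in for Pydantic so the snippet runs without any dependencies or API key.

```python
import datetime
import json
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


def validate_date_payload(raw: str) -> datetime.date:
    """Parse '{"extracted_date": "YYYY-MM-DD"}' and check the year range."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    if not isinstance(data, dict) or "extracted_date" not in data:
        raise ValueError("missing 'extracted_date' field")
    parsed = datetime.date.fromisoformat(data["extracted_date"])
    if not 1970 < parsed.year < 2100:
        raise ValueError("date is outside the expected range")
    return parsed


def call_with_retries(
    ask_model: Callable[[List[Message]], str],
    messages: List[Message],
    max_retries: int = 2,
) -> Optional[datetime.date]:
    """Call the model, validate its output, and re-ask with the error on failure."""
    history = list(messages)
    for _ in range(max_retries + 1):
        raw = ask_model(history)
        try:
            return validate_date_payload(raw)
        except (ValueError, TypeError) as err:
            # Feed the failure back so the next attempt can correct itself.
            history.append({"role": "assistant", "content": raw})
            history.append({"role": "user", "content": f"Invalid output: {err}. Try again."})
    return None


def fake_model(history: List[Message]) -> str:
    """Stand-in for an LLM: wrong on the first try, valid after a correction."""
    if len(history) > 1:
        return '{"extracted_date": "2025-04-01"}'
    return "next Tuesday-ish"


result = call_with_retries(fake_model, [{"role": "user", "content": "next Tuesday"}])
print(result)
```

The calling code only ever sees a validated date or `None`; the messy intermediate attempts stay inside the loop.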

Plus, for these kinds of targeted tasks, inference speed on smaller, optimized models (like GPT-3.5 Turbo, GPT-4o mini, Claude Haiku, etc.) is often surprisingly fast, making this practical for many real-time uses.

Honestly, I feel like we're just scratching the surface here. Moving from using AI to write code to using AI as functional code components opens up fascinating possibilities. It forces us to rethink how we build systems and where complexity should live. It’s an exciting time to be figuring out how these powerful, increasingly affordable tools fit into our workflows.

I can endorse the immense utility of this from my experience of implementing an ontology for an industry domain. A library like Instructor, combined with good notational approaches incorporating Pydantic, significantly helps bridge the gap between the unstructured world of Generative AI and the structured, deterministic world of conventional software.